THE PRODUCT

Internal hub for genAI use cases

As at many companies, leadership is encouraging IBMers to bring forward new ideas for products infused with generative AI.

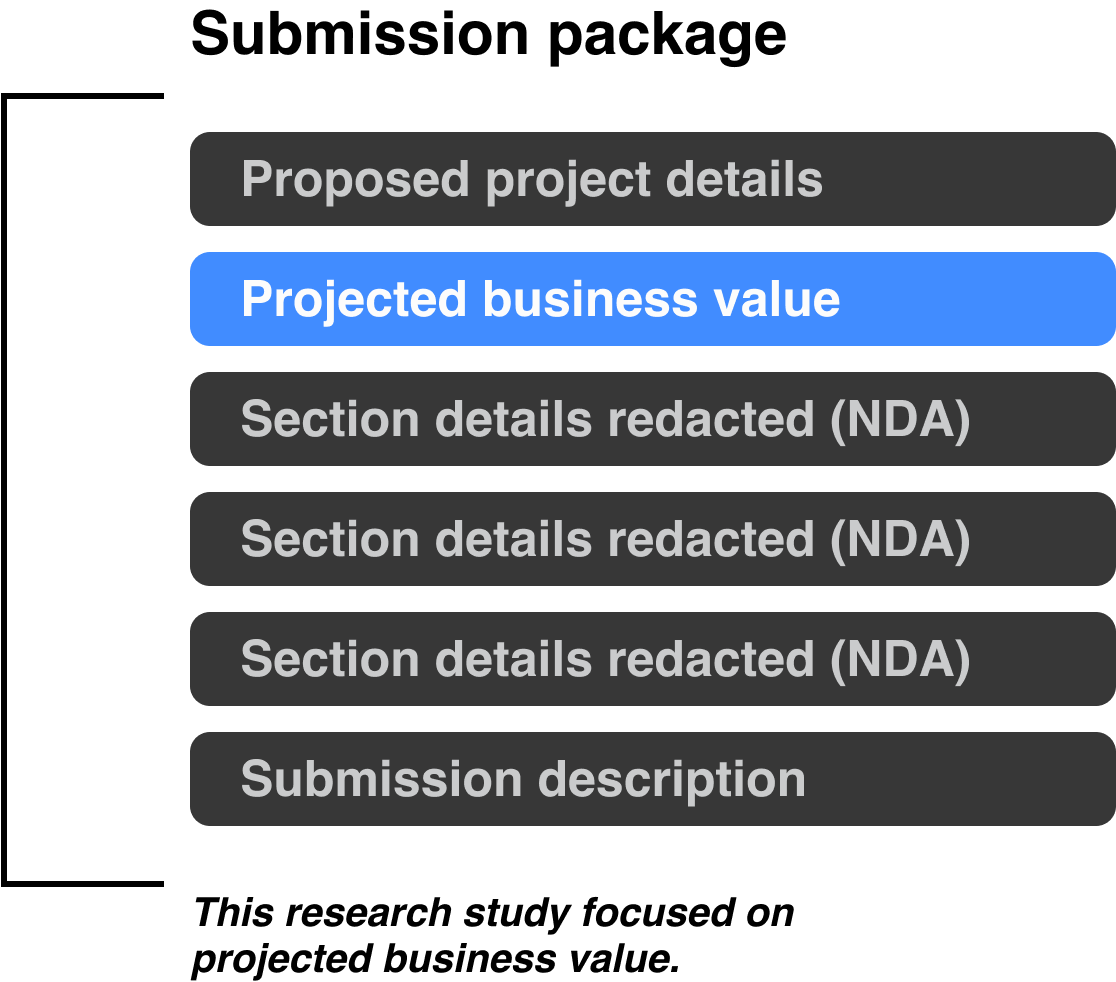

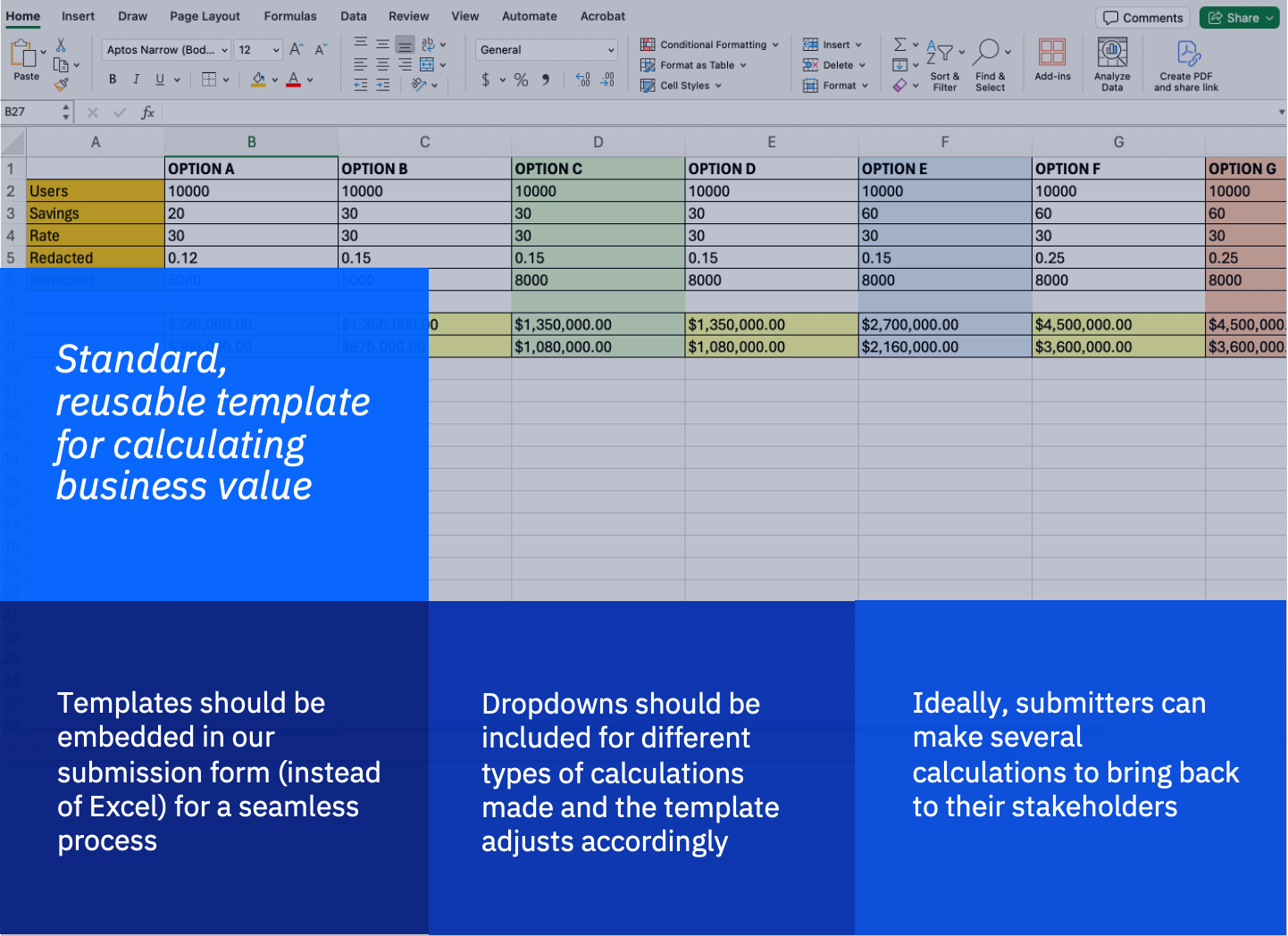

Our product is a review system to decide which ideas IBM organizations will invest in.

We have several reviewers, but lately they’ve had an overwhelming number of new submissions and are falling behind.